K is negative when agreement is worse than chance.Īfter you have clicked the Test button, the program will display the value for Kappa with its Standard Error and 95% confidence interval (CI) (Fleiss et al., 2003).K is 0 when there is no agreement better than chance.K is 1 when there is perfect agreement between the classification systems.If the difference between the first and second category is less important than a difference between the second and third category, etc., use quadratic weights.Īgreement is quantified by the Kappa ( K) statistic (Cohen, 1960 Fleiss et al., 2003):

Use linear weights when the difference between the first and second category has the same importance as a difference between the second and third category, etc. When there are 5 categories, the weights in the linear set are 1, 0.75, 0.50, 0.25 and 0 when there is a difference of 0 (=total agreement) or 1, 2, 3 and 4 categories respectively. In the linear set, if there are k categories, the weights are calculated as follows: MedCalc offers two sets of weights, called linear and quadratic. If the data come from a nominal scale, do not select Weighted Kappa.

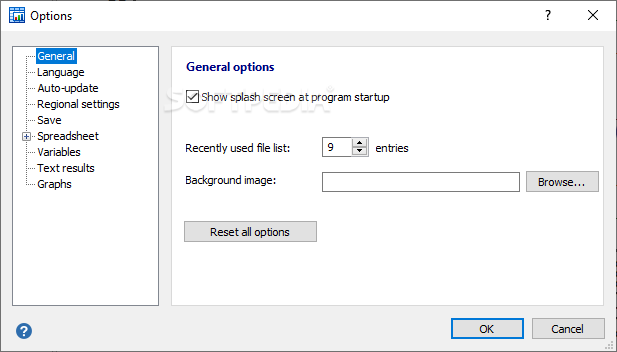

Next enter the number of observations in each cell of the data table.First select the number of categories in the classification system - the maximum number of categories is 12.Use Inter-rater agreement to evaluate the agreement between two classifications (nominal or ordinal scales).
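The Kappa and weighted Kappa point estimates can be computed from the table of counts in a few lines of Python. This is a minimal sketch, not MedCalc's implementation. It makes the following assumptions:

- The table is entered as a list of rows of counts, with rows for the first classification and columns for the second.
- It uses the standard definition K = (p_o - p_e) / (1 - p_e), where both the observed and the chance-expected agreement are weighted by w_ij.
- The quadratic set takes the usual form w_ij = 1 - (i - j)² / (k - 1)².

The function names `agreement_weights` and `kappa` are hypothetical. The Standard Error and confidence interval of Fleiss et al. (2003) are not computed here.

```python
def agreement_weights(k, scheme="linear"):
    """Return a k x k matrix of agreement weights w[i][j] (hypothetical helper).

    scheme="linear":    w = 1 - |i - j| / (k - 1)
    scheme="quadratic": w = 1 - (i - j)**2 / (k - 1)**2   (assumed usual form)
    scheme="none":      1 on the diagonal, 0 elsewhere (plain, unweighted kappa)
    """
    if k < 2:
        raise ValueError("need at least 2 categories")
    weights = []
    for i in range(k):
        row = []
        for j in range(k):
            d = abs(i - j) / (k - 1)  # distance between categories, scaled to 0..1
            if scheme == "linear":
                row.append(1 - d)
            elif scheme == "quadratic":
                row.append(1 - d * d)
            elif scheme == "none":
                row.append(1.0 if i == j else 0.0)
            else:
                raise ValueError(f"unknown weighting scheme: {scheme!r}")
        weights.append(row)
    return weights


def kappa(table, scheme="none"):
    """Kappa (scheme="none") or weighted kappa for a square table of counts.

    table[i][j] = number of cases put in category i by the first
    classification and in category j by the second one.
    """
    k = len(table)
    if any(len(row) != k for row in table):
        raise ValueError("table must be square")
    n = sum(sum(row) for row in table)
    if n == 0:
        raise ValueError("table contains no observations")
    w = agreement_weights(k, scheme)
    # Marginal proportions of each classification
    row_p = [sum(row) / n for row in table]
    col_p = [sum(table[i][j] for i in range(k)) / n for j in range(k)]
    # Observed (weighted) agreement
    p_o = sum(w[i][j] * table[i][j] / n for i in range(k) for j in range(k))
    # (Weighted) agreement expected by chance, from the marginals
    p_e = sum(w[i][j] * row_p[i] * col_p[j] for i in range(k) for j in range(k))
    if abs(1 - p_e) < 1e-12:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    table = [[10, 5, 0], [0, 10, 5], [0, 0, 10]]
    print(round(kappa(table), 3))            # unweighted
    print(round(kappa(table, "linear"), 3))  # linear weights
```

In the example table, every disagreement falls between adjacent categories. The unweighted Kappa works out to 27/43 (about 0.63). The linearly weighted Kappa is 5/7 (about 0.71), because with 3 categories the linear weights give half credit to adjacent-category disagreements. With only 2 categories, both weight sets reduce to the identity matrix, so weighted and unweighted Kappa coincide.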
